Ranking Astronomers

If you want to fire up astronomers (and scientists in general), start discussing research impact and research metrics. These are the buzzwords at the moment, as governments around the world carry out assessments of research done with public funds. Here in Australia we are in the midst of the current round of Excellence in Research for Australia (ERA), in which university research is scored on a scale that compares it to international standards.

I could write pages on attitudes to such exercises and what they mean, but I do note that the number of papers an academic had published was a factor considered in a recent round of redundancies at the University of Sydney. What I will focus on here, though, is an individual ranking: the h-index.

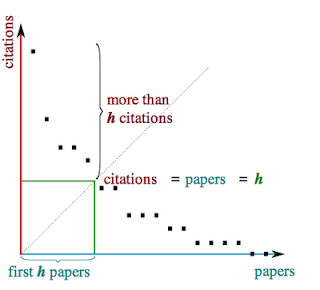

You can read the details of the h-index at wikipedia, but simply put, take all the papers written by an academic, and find out how many times each of them has been cited. Order the papers from the most cited to the least, and where the number of the paper (going down the list) matches the number of citations, then this is the academics h-index. Here's the piccy from wikipedia:

If you check me out on Google Scholar, I have an h-index of 49, which means I have 49 papers with at least 49 citations each. Tools like Google Scholar, and the older (but still absolutely excellent) Astrophysics Data System, make calculating the h-index for astronomers (and even academics in general) extremely easy. The result is that people now write papers about people's h-indices, papers like this one, which ranks Australian astronomers in terms of their output over particular periods.
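The calculation these services automate is short. Below is a minimal Python sketch of the definition above. It assumes citation counts are available as a plain list of integers. The function name `h_index` and the sample numbers are illustrative only; they are not taken from Google Scholar or ADS.

```python
def h_index(citations):
    """Largest h such that h papers each have at least h citations."""
    # Order the papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    # Walk down the list; rank is the 1-based position of each paper.
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            # Every later paper has no more citations, so h cannot grow.
            break
    return h


# Hypothetical citation counts for one researcher's five papers.
papers = [10, 8, 5, 4, 3]
print(h_index(papers))  # 4
```

Sorting once and walking down the ranked list mirrors the by-hand procedure. The loop stops at the first paper whose citation count falls below its position.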

Now, a quick Google search will turn up a bucket load of articles for and against the h-index. There are a lot of complaints about it. Critics say it does not take into account things like the field of research, the number of authors, or the time needed to build up citations (slow-cooker research that is not recognised at the time but becomes influential after a long period, sometimes after the researcher has died!).

Others seem to have a bit of a dirty feeling about the h-index, as if ranking astronomers and their research were somewhat beneath an academic, especially when it comes to producing league tables like the one in the paper above.

The problem is that, in reality, scientists are ranked all the time, be it for grant applications, telescope time, jobs and so on, and in all of these it is necessary to compare one researcher to another. Such comparisons can be very difficult. When faced with a mountain of CVs, with publication records as long as your arm, grant successes and even outside interests (why, oh why, do people feel it necessary to tell me in job applications that they like socialising and reading fantasy books??), it can be hard to compare John Smith with Jayne Smythe.

This is why I am a fan of the h-index.

But let's be clear about why and how. I know the age-old statement that "past performance is not an indicator of future success". But when hiring someone, or allocating grant money or telescope time, people are implicitly looking for a return on their investment; they want to see success. To judge that, you need to look at people's past records and extrapolate into the future. If Joe Bloggs has received several grants in the past and nothing has come of them, do you really want to give them more money? And what if they received large amounts of telescope time and never published the results? Is it a good idea to give them more time?

But the stakes are higher. "Impact" is key, and one view of impact is that your research is read and, more importantly, cited by other academics around the world. What if Joe is a prolific publisher, but all of his papers appear in the Bulgarian Journal of Moon Rocks, with no evidence that anyone is reading them? Do you want to fund him to write more papers that no one is going to read?

Now, some will say that academic freedom means Joe should be free to work on whatever he likes, and I agree that this is true. But as I pointed out at the start of this post, governments, and hence universities, are assessing research and funding, and this assessment wants to see "dollars = impact".

So, when looking at applications, be it for jobs or grants, the h-index is a good place to start: does this researcher have a track record of publishing work that is cited by others? For jobs in particular, the h-index appears to have some predictive power (although I know this is not universally true, as I know of a few early hot-shots who fell off the table).

But let me stress, the h-index is a good place to start, not end, the process.

I agree with Bryan Gaensler's statement that we should "Reward ideas, not CVs", and the next "big thing" might come from left field, from a little-known researcher who has yet to establish themselves. Realistically, though, a research portfolio should be a mix of ventures: research that is guaranteed to produce solid results, alongside some risky ideas that might pay off big time. We have to judge that by looking at the research proposal as a whole (and I think this holds whether the portfolio belongs to an individual, a department, or even a country).

Anyway, professional academics know that they are being assessed and ranked, and know that those who count beans are watching. I know there is a myriad of potential metrics that one can use to assess a researcher (and, funnily enough, many researchers prefer the one they look good in). I also know that you should look at the whole package when assessing the future potential of a researcher.

But the h-index is a good place to start.

From the comments:

> But let's be clear why and how. I know the age-old statement that "Past performance is not an indicator of future success" but when hiring someone, or allocating grant money or telescope time, people are implicitly looking at a return on their investment, they want to see success.

Past performance can be used as a proxy to estimate future success. That's fine as far as it goes, but it doesn't go far enough. People shouldn't be hired as a reward for past accomplishments but because they will do good work in the future.

In practice, someone who was, say, a Hubble Fellow will probably make the shortlist automatically, while others will be scrutinized more. However, one should actually demand more of the Hubble Fellow, because he had a better environment in which to work than the typical post-doc. In practice, people probably demand less.

The other side of the coin is that someone who hasn't published as much, due to circumstances beyond his control, doesn't rank as high. One should factor in the difficulty of his situation. This is not out of sympathy. In the future, the working conditions of candidates A and B will be essentially the same, since they are applying for the same job. So the person doing the hiring should, in his own interest, multiply the h-index, or whatever, by the difficulty of the candidate's situation.

(Yes, someone who was a Hubble Fellow presumably got the fellowship because he was good, though of course other factors sometimes play a role in hiring. But he has already been rewarded for that, so to speak, by the Hubble Fellowship itself. If this is an automatic passage to the next job and so on, then all one has to do is land a good job at the beginning of one's career and coast from there. That is especially dangerous if the first good job was not awarded entirely on the basis of qualifications. But even if it were, people can burn out, etc.)

The h-index is probably better than counting the number of papers or the number of citations, and most objections to it apply to bibliometry in general. However, I think that one should take the number of authors into account. Suppose two candidates have the same h-index (and, let's say, the same number of papers, the same total number of citations, etc.). They are certainly not comparable if A's papers are all single-author papers and B's have several authors each. So, divide the number of citations of a paper by the number of authors before calculating the h-index.
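The commenter's suggestion can be sketched in Python as follows. This is one simple version under stated assumptions:

- each paper is given as a hypothetical `(citations, n_authors)` pair;
- a paper's citations are shared equally among its authors.

Other weighting schemes exist, and nothing here is meant as a standard definition. The two candidates and their numbers are made up for illustration.

```python
def h_index(scores):
    """Largest h such that h papers each have a score of at least h."""
    ranked = sorted(scores, reverse=True)
    h = 0
    for rank, score in enumerate(ranked, start=1):
        if score >= rank:
            h = rank
        else:
            break
    return h


def author_normalised_h_index(papers):
    """papers: list of (citations, n_authors) pairs (hypothetical format)."""
    for cites, n_authors in papers:
        if n_authors < 1:
            raise ValueError("every paper needs at least one author")
    # Share each paper's citations equally among its authors (an assumption).
    shares = [cites / n_authors for cites, n_authors in papers]
    return h_index(shares)


# Two hypothetical candidates with identical raw citation counts.
a = [(12, 1), (9, 1), (6, 1), (4, 1)]  # all single-author papers
b = [(12, 4), (9, 3), (6, 3), (4, 2)]  # all multi-author papers

print(h_index([c for c, _ in a]), h_index([c for c, _ in b]))  # 4 4
print(author_normalised_h_index(a), author_normalised_h_index(b))  # 4 2
```

On raw citations the two candidates are indistinguishable. After sharing credit among co-authors, B's per-paper scores drop to 3, 3, 2 and 2, giving an adjusted h-index of 2.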

I think that, if one wants to use one number, the g-index is a bit better than the h-index.
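For comparison, here is a minimal sketch of the g-index. It uses the common definition: g is the largest number such that the g most-cited papers together have at least g² citations. It assumes a plain list of citation counts and caps g at the number of papers. Some formulations instead pad the list with zero-citation papers, which can give a larger g. The function name `g_index` is illustrative.

```python
from itertools import accumulate


def g_index(citations):
    """Largest g such that the top g papers have at least g*g citations in total.

    This version caps g at the number of papers (no zero-citation padding).
    """
    # Order the papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    g = 0
    # accumulate() yields the running total of citations for the top-ranked papers.
    for rank, total in enumerate(accumulate(ranked), start=1):
        if total >= rank * rank:
            g = rank
    return g


# Hypothetical record: one very highly cited paper and three barely cited ones.
print(g_index([30, 1, 1, 1]))  # 4
```

The example shows the property usually cited in the g-index's favour. A single very highly cited paper lifts g to 4, while the h-index of the same record is only 1.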

The biggest problem in bibliometry, though, is probably the fact that the criteria for becoming an author vary from place to place and even within an institution. In other words, the amount of effort required to move from the acknowledgements to co-author status varies extremely widely.